Abstract

Event cameras show great potential for visual odometry (VO) in handling challenging situations, such as fast motion and high dynamic range.

Despite this promise, the sparse and motion-dependent nature of event data continues to limit the performance of feature-based and direct data association methods in practical applications.

To address these limitations, we propose Deep Event Inertial Odometry (DEIO), the first monocular learning-based event-inertial framework, which combines a learning-based method with traditional nonlinear graph-based optimization.

Specifically, an event-based recurrent network is adopted to provide accurate and sparse associations of event patches over time.

DEIO further fuses these associations with IMU measurements to recover metric-scale poses and provide robust state estimation.

The Hessian information derived from the learned differentiable bundle adjustment (DBA) is utilized to optimize the co-visibility factor graph, which tightly incorporates event patch correspondences and IMU pre-integration within a keyframe-based sliding window.

Comprehensive validations demonstrate that DEIO achieves superior performance on 10 challenging public benchmarks compared with more than 20 state-of-the-art methods.
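To make the IMU pre-integration term mentioned in the abstract more concrete, the following is a minimal sketch of the textbook discrete pre-integration of gyroscope and accelerometer samples between two keyframes. It is not taken from the DEIO code base: the function names (`so3_exp`, `preintegrate`), the fixed sample period `dt`, and the constant-bias assumption are all illustrative, and noise propagation and bias Jacobians are omitted.

```python
import math

def matmul(A, B):
    """3x3 matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(A, v):
    """3x3 matrix times 3-vector."""
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def so3_exp(w):
    """Rodrigues' formula: rotation vector (rad) -> 3x3 rotation matrix."""
    theta = math.sqrt(sum(x * x for x in w))
    wx, wy, wz = w
    K = [[0.0, -wz, wy], [wz, 0.0, -wx], [-wy, wx, 0.0]]  # skew matrix of w
    if theta < 1e-9:
        a, b = 1.0, 0.5  # small-angle limits of sin(t)/t and (1 - cos t)/t^2
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / theta ** 2
    K2 = matmul(K, K)
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j]
             for j in range(3)] for i in range(3)]

def preintegrate(samples, dt, gyro_bias=(0.0, 0.0, 0.0), accel_bias=(0.0, 0.0, 0.0)):
    """Accumulate relative rotation dR, velocity dv, and position dp.

    samples: iterable of (gyro, accel) 3-tuples in the IMU frame.
    The deltas are expressed in the frame of the first sample and do not
    include gravity or the initial velocity; those enter in the factor residual.
    """
    dR = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    dv = [0.0, 0.0, 0.0]
    dp = [0.0, 0.0, 0.0]
    for gyro, accel in samples:
        w = [g - b for g, b in zip(gyro, gyro_bias)]
        # Rotate the bias-corrected specific force into the first frame.
        a = matvec(dR, [x - b for x, b in zip(accel, accel_bias)])
        # Position uses the velocity and rotation from before this step.
        dp = [p + v * dt + 0.5 * ai * dt * dt for p, v, ai in zip(dp, dv, a)]
        dv = [v + ai * dt for v, ai in zip(dv, a)]
        dR = matmul(dR, so3_exp([wi * dt for wi in w]))
    return dR, dv, dp
```

In a sliding-window estimator, deltas of this kind would typically define a relative-motion residual between consecutive keyframes, which is then optimized jointly with the visual (here, event patch) terms.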

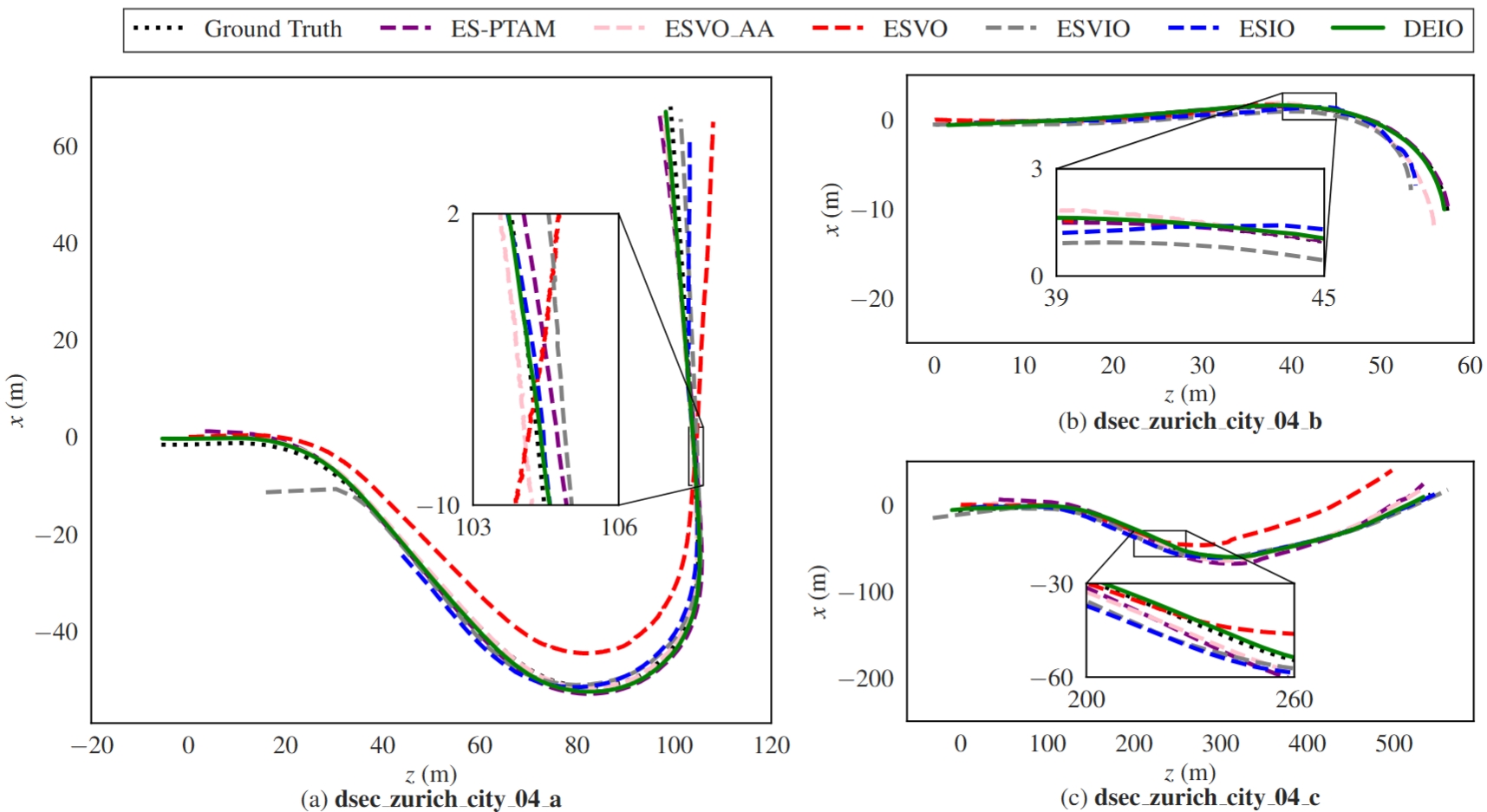

Qualitative Evaluation

| indoor_forward_7 in UZH-FPV | Drone Flight in Dark Scene |

| dynamic_6dof in DAVIS240c | boxes_6dof in DAVIS240c |

| HKU_agg_small_flip in Stereo-HKU | HKU_agg_tran in Stereo-HKU |

| Vicon_dark1 in Mono-HKU | Dense_street_night_easy_a in ECMD |

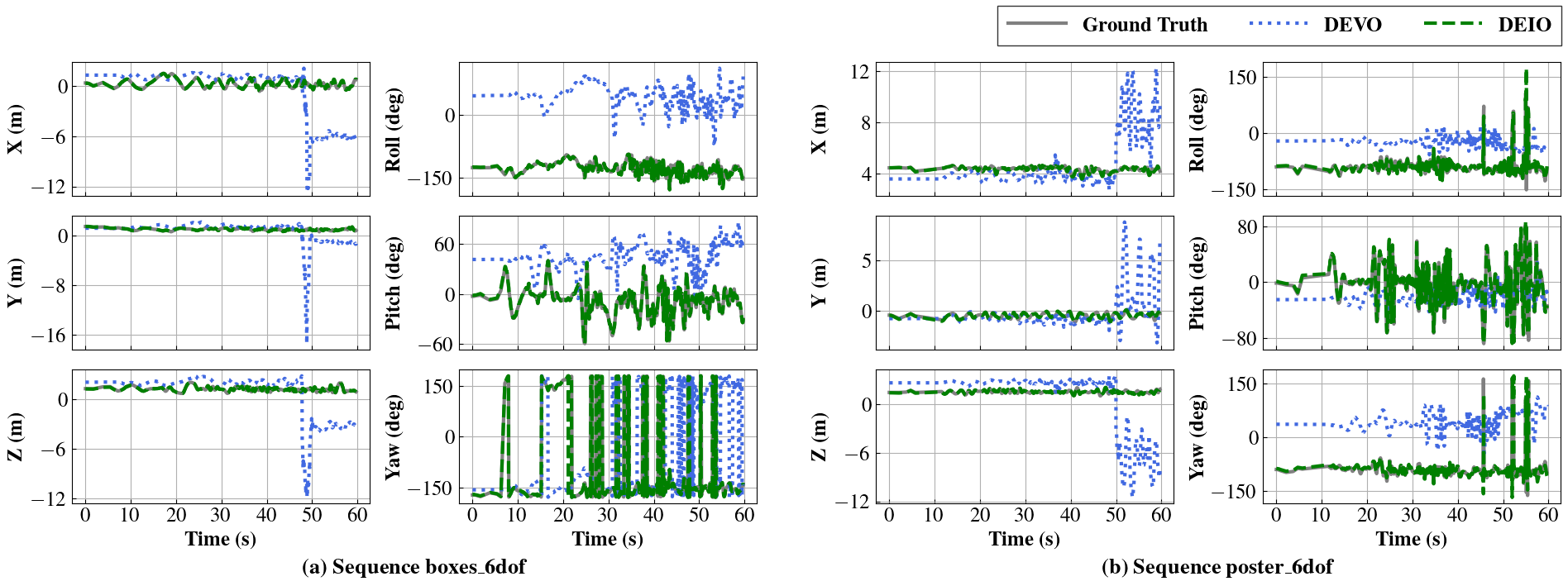

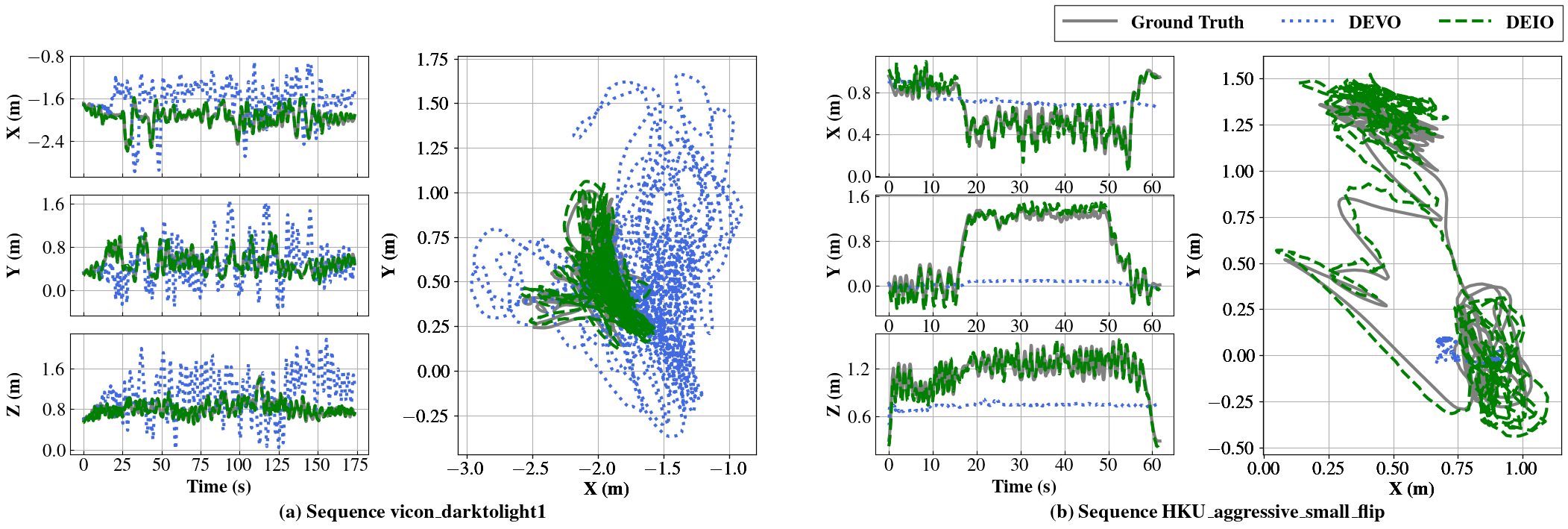

Qualitative Comparison Against GT

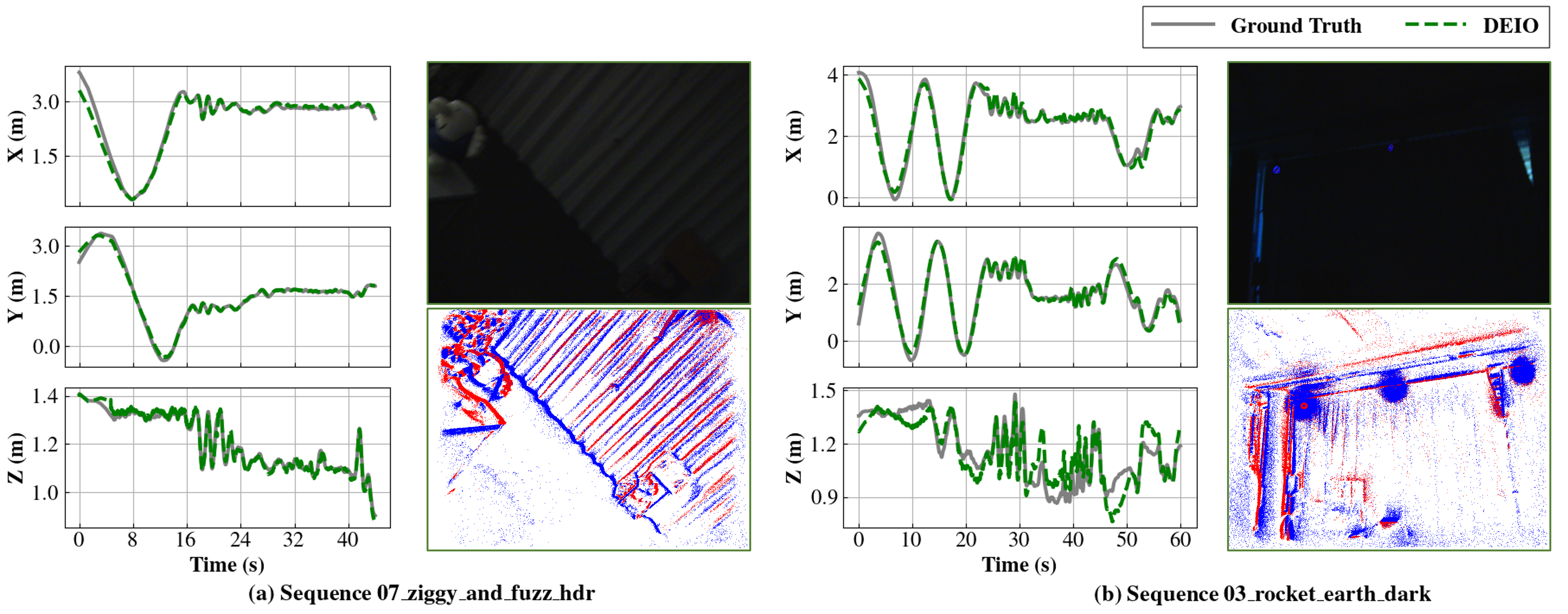

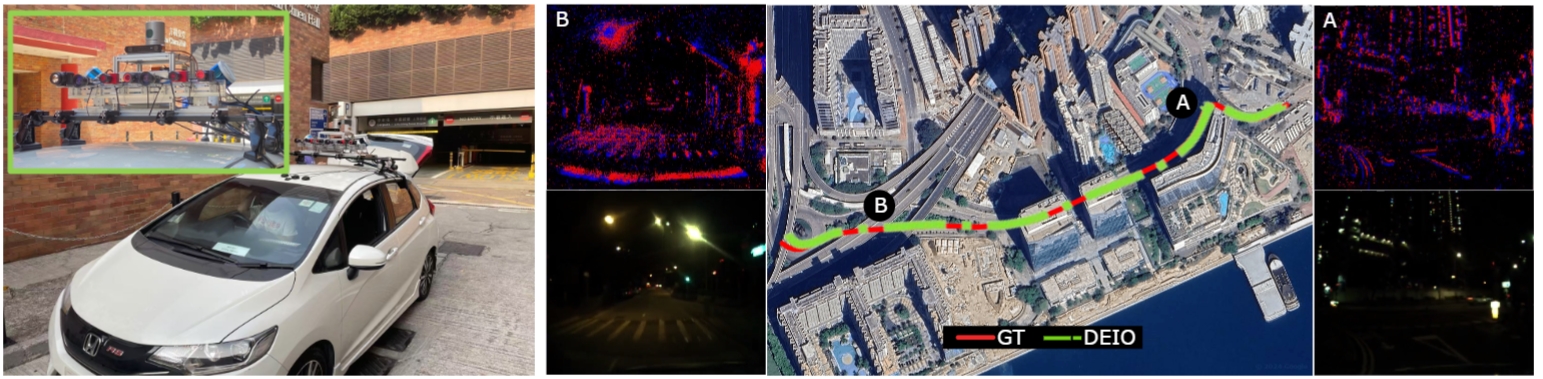

Evaluation in Night Driving Scenarios

Driving scenarios are challenging for event-based state estimation, especially at night, when widespread flickering lights (e.g., LED signs) generate an overwhelming number of noisy events. Abrupt vehicle motion, such as sharp turns and sudden stops, further complicates event-based estimation. In this section, we select the Dense_street_night_easy_a sequence of the ECMD dataset, which features numerous flashing lights from vehicles, street signs, and buildings, as well as moving vehicles, making event-based SLAM more difficult. The dataset was recorded with two stereo pairs of event cameras (640 × 480 and 346 × 260) mounted on a car driven through various road conditions in Hong Kong, such as streets, highways, and tunnels. DEIO runs on the events from the DAVIS346 and the IMU sensor; the image frames of the DAVIS346 are used for illustration only. The following figure shows that our estimated trajectory drifts only slightly, with a 4.7 m error over the 620 m drive (about 0.76% of the distance traveled). To the best of our knowledge, these are the first results on pose tracking in night driving scenarios using monocular event-inertial odometry. The closest earlier attempt, ESVIO, relies on a combination of stereo events, stereo images, and IMU data, whereas DEIO operates with a monocular setup.

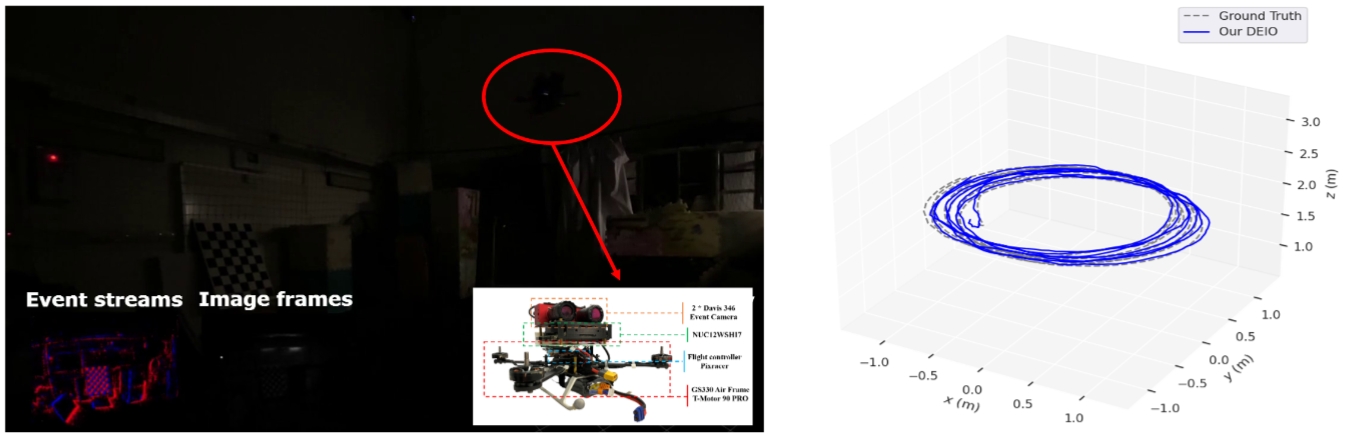

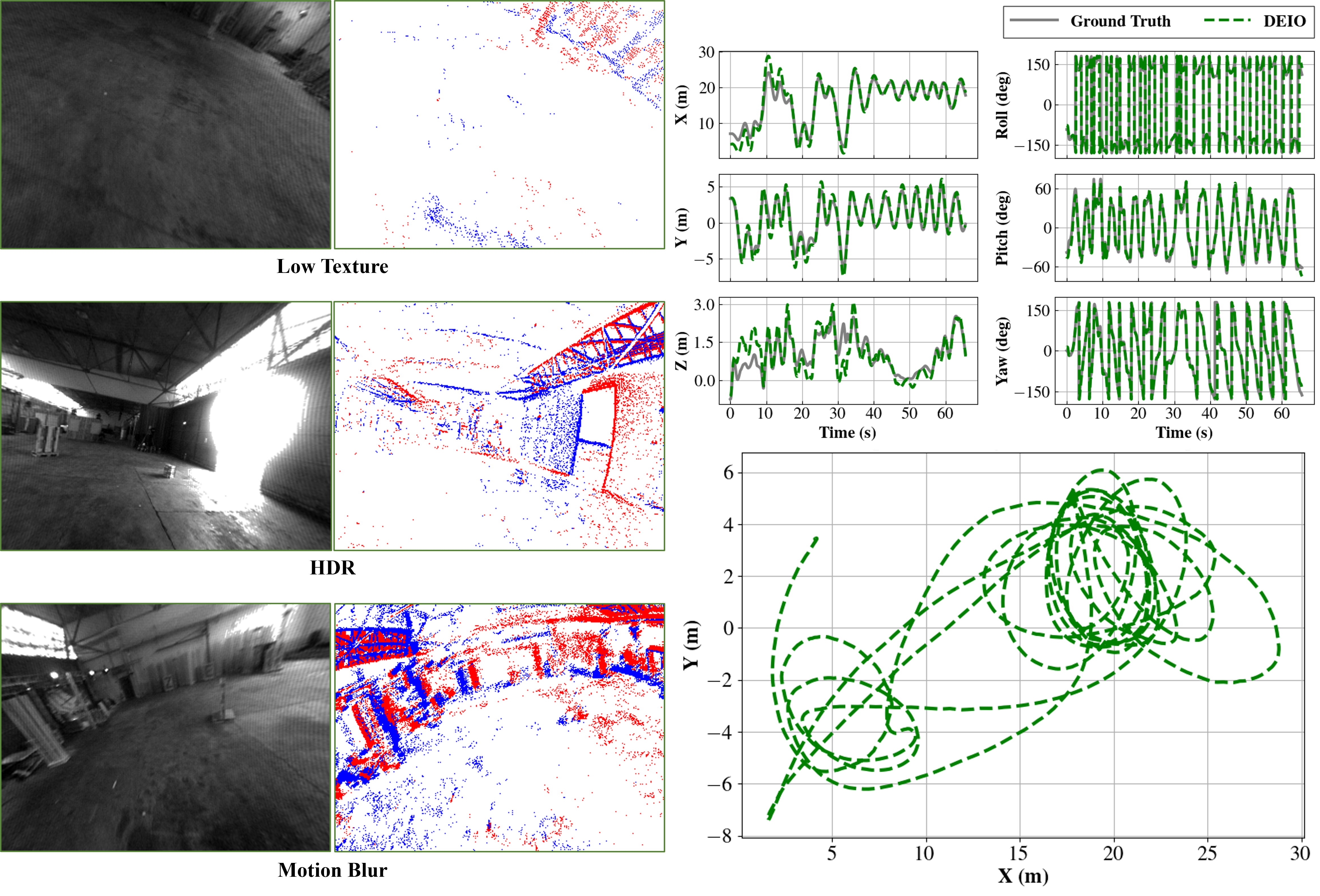

Evaluation in Dark Quadrotor-Flight

We evaluate DEIO in a dark quadrotor flight experiment. The quadrotor is commanded to track a circular pattern with a radius of 1.5 m at a height of 1.8 m. The illuminance of the environment is very low, so the onboard camera captures minimal visual information. The total trajectory length is 60.7 m, with a mean position error (MPE) of 0.15% and an average pose tracking error of 9 cm. We further illustrate the estimated trajectories (translation and rotation) of DEIO against the ground truth, together with the corresponding errors. The translation errors along the X, Y, and Z axes are all within 0.5 m, while the roll and pitch errors are within 6° and the yaw error is within 3°. To the best of our knowledge, this is also the first implementation of monocular event-inertial odometry for pose tracking in dark flight environments; previous works rely on image-aided event-IMU estimators.
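The relation between the reported numbers can be checked with a short sketch. It assumes that MPE denotes the mean position error expressed as a percentage of the ground-truth trajectory length, a definition the text above does not spell out. The trajectory below is synthetic (a 1.5 m circle at 1.8 m height with a constant 9 cm offset), not the recorded flight data, and the function names are illustrative.

```python
import math

def path_length(positions):
    """Sum of straight-line distances between consecutive 3D positions."""
    return sum(math.dist(p, q) for p, q in zip(positions, positions[1:]))

def mean_position_error_percent(estimated, ground_truth):
    """Mean Euclidean position error as a percentage of the ground-truth path length.

    Assumes both trajectories are already aligned and time-synchronized,
    so that estimated[i] corresponds to ground_truth[i].
    """
    errors = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    return 100.0 * (sum(errors) / len(errors)) / path_length(ground_truth)

# Synthetic ground truth: circle of radius 1.5 m at 1.8 m height,
# repeated until the path is roughly 60.7 m long.
radius, height, total_length, n = 1.5, 1.8, 60.7, 5000
total_angle = total_length / radius
ground_truth = [
    (radius * math.cos(total_angle * i / (n - 1)),
     radius * math.sin(total_angle * i / (n - 1)),
     height)
    for i in range(n)
]
# Synthetic estimate: ground truth shifted by a constant 9 cm along X.
estimated = [(x + 0.09, y, z) for x, y, z in ground_truth]

mpe = mean_position_error_percent(estimated, ground_truth)
print(f"path length: {path_length(ground_truth):.1f} m, MPE: {mpe:.2f} %")
```

Under this assumed definition, a 9 cm mean error over a 60.7 m trajectory corresponds to roughly 0.15%, which is consistent with the figures quoted above.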